Risk Tip #2 – how do we measure control effectiveness?

Measuring control effectiveness is difficult for many organisations (if not most). What worries me is how often I come across the guesswork that goes into measuring control effectiveness, when what's actually needed is evidence that the controls in place are right for the resources, budget and risk.

What I find fascinating is that the majority of risk management databases on the market that I have reviewed provide a free text field to list the controls and, usually, a free text field to provide an assessment of effectiveness. So, for instance, a company may list six or more controls but have no meaningful way of assessing the effectiveness of each control individually, and instead provides one effectiveness rating that covers all of them. Given that the assessments of likelihood and consequence are made with due regard to the effectiveness of the controls, this blanket approach may lead to flawed assessments of the risk level.

To answer the question of how we measure control effectiveness, it is worthwhile to go back to the start and define what a control is.

The humble control

Essentially, a control is something that is currently in place to reduce risk within an organisation and/or an industry. Controls have often been brought in as a result of a previous situation or incident. Note that, in many cases, these situations or incidents arise not because of a lack of controls but because of a failure of existing controls. So the real key to managing risk effectively is to ensure that our controls are effective.

There are three key categories for controls:

- Preventative – controls that aim to reduce the likelihood of a situation occurring, for example, policies and procedures, approvals, authorisations, police checks and training;

- Detective – controls that aim to identify failures in the current control environment, for example, reviews of performance, reconciliations, audits and investigations; and

- Corrective – controls that aim to reduce the consequence and/or rectify a failure after it has been discovered, for example, crisis management plans, business continuity plans, insurance and disaster recovery plans.

As you can see, controls are absolutely crucial in the management of risk.

So, are all controls made equal?

The answer to this question is, obviously, no. What separates those controls that are critical from those that are far less important is the consequence of the risk should it occur.

There are risks within any organisation that, if they were to materialise as an incident, would have serious implications for the ongoing viability and survivability of the organisation (the severe/catastrophic consequences in the consequence matrix). These must be prevented.

The way we do this is through the controls we have in place.

Let’s say we are working at a major hardware chain in the plant import and distribution part of the business. The identified risk is:

release of a plant-borne biohazard (including fauna) into the community

There is a range of controls including (but not limited to):

- fumigation at the country of origin and the country of receipt;

- handling protocols;

- inspection protocols;

- training of staff;

- supervision of staff;

- sufficient breaks to maintain concentration;

- … and the list goes on

The key here is that we want to make sure these controls are as effective as possible, because a failure of one or more of them could lead to the risk materialising. These controls are critical controls and, therefore, must be the subject of assurance.

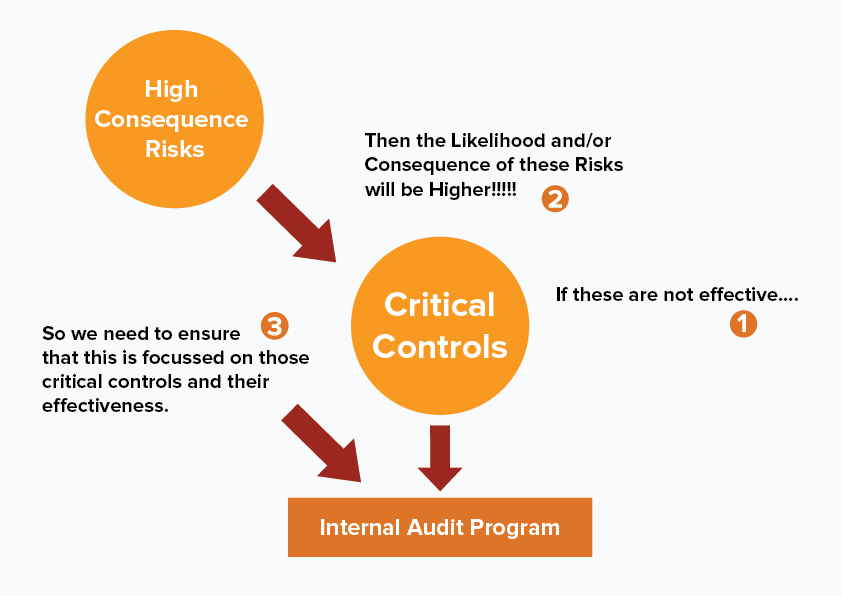

The relationship is shown in the diagram below:

The next step – control criticality

It needs to be recognised that not all of the controls associated with a Severe consequence risk will contribute equally to reducing or maintaining the level of the risk. If all of the controls associated with high consequence risks are treated the same, we may commit more resources than are necessary to the assurance function. To that end, assigning a criticality to each of the controls will assist in prioritising our audit program.

Here’s a way it can be done:

| Criticality | Descriptor |
| --- | --- |
| 5 | The control is absolutely critical to the management and reduction of the risk. If this control is ineffective or partially effective, the likelihood and/or consequence of the risk will increase significantly (i.e. increases likelihood or consequence by 3 or more levels) |
| 4 | The control is very important to the management and reduction of the risk. If this control is ineffective or partially effective, the likelihood and/or consequence of the risk will increase (i.e. increases likelihood or consequence by 2 levels) |
| 3 | The control is important to the management and reduction of the risk. If this control is ineffective or partially effective, the likelihood and/or consequence of the risk will increase (i.e. increases likelihood or consequence by 1 level) |
| 2 | The control has some impact on the management and reduction of the risk. Depending on the criticality of the other controls, an analysis should be undertaken to determine the necessity of this control. |
| 1 | The control has little to no impact on the management and reduction of the risk. It is unlikely this control is required. |

Having identified the controls against the risks with the highest level of consequence and then assessed them for their criticality, we now have a list of controls associated with that risk that not only need to be effective but also require evidence of effectiveness.
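As a rough illustration, the numeric part of the criticality table can be written as a small lookup. This is only a sketch: the function name and the example figures for the plant import controls are hypothetical, and because the table gives no numeric test for criticality 1 and 2, those cases are returned as `None` to signal that a judgement call is still needed.

```python
from typing import Optional


def criticality_from_level_increase(levels: int) -> Optional[int]:
    """Map the number of levels the likelihood or consequence would rise by
    (if the control were ineffective or partially effective) to a criticality.

    Returns None when the increase is zero: the table separates
    criticality 1 and 2 by judgement, not by a number.
    """
    if levels < 0:
        raise ValueError("levels must be zero or greater")
    if levels >= 3:
        return 5
    if levels == 2:
        return 4
    if levels == 1:
        return 3
    return None


# Hypothetical level increases, for illustration only (not from an assessment).
level_increase = {
    "fumigation": 3,
    "inspection protocols": 2,
    "sufficient breaks": 0,
}
ratings = {
    name: criticality_from_level_increase(levels)
    for name, levels in level_increase.items()
}
```

Controls that come back as `None` are the ones where, per the table, an analysis of whether the control is needed at all is more useful than an audit.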

But the biggest question – what does effective look like?

Organisations continue to find it difficult to assess the true effectiveness of their controls. Some will use Control Self Assessments (CSA); however, it is very rare that control effectiveness is measured against performance measures developed specifically for the control.

Let’s use an example of a wharf where the risk has been identified as: Catastrophic material failure of infrastructure. We have identified multiple causes, the first of which is: Lack of/ineffective maintenance. Against this cause, we identify the following controls:

- Preventative/routine maintenance program

- Inspections

For the first control, preventative/routine maintenance, the following performance measures are developed:

| Effectiveness | Performance |
| --- | --- |
| Effective | 100% of routine maintenance tasks conducted within designated timeframes |
| Mostly Effective | 80-99% of routine maintenance tasks conducted within designated timeframes |
| Partially Effective | 50-79% of routine maintenance tasks conducted within designated timeframes |
| Not Effective | <50% of routine maintenance tasks conducted within designated timeframes |

For the second control, inspections, the following performance measures are developed:

| Effectiveness | Performance |
| --- | --- |
| Effective | 100% of maintenance inspections conducted within designated timeframes and 100% of issues identified during inspections are rectified within specified timeframes |
| Mostly Effective | 80-99% of maintenance inspections conducted within designated timeframes and 80-99% of issues identified during inspections are rectified within specified timeframes |
| Partially Effective | 50-79% of maintenance inspections conducted within designated timeframes and anything less than 79% of issues identified during inspections are rectified within specified timeframes |
| Not Effective | <50% of maintenance inspections conducted within designated timeframes and anything less than 70% of issues identified during inspections are rectified within specified timeframes |

Provided we actually undertake the measurement of the controls, we can now provide evidence of effectiveness to management. In doing so, we are providing them with assurance that the risks with the most significant consequences to the organisation, should they materialise, are being effectively controlled. This level of assurance cannot be provided when control effectiveness is guessed rather than assessed.
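To show how a performance measure turns a count into a rating, here is a minimal sketch for the first control (routine maintenance). The function name and the task counts are hypothetical. It also assumes that anything from 80% up to, but not including, 100% counts as Mostly Effective, since the bands are written in whole percentages.

```python
def rate_maintenance_effectiveness(on_time: int, total: int) -> str:
    """Rate the routine maintenance control from the number of tasks
    completed within their designated timeframes."""
    if total <= 0:
        raise ValueError("total must be greater than zero")
    if not 0 <= on_time <= total:
        raise ValueError("on_time must be between 0 and total")

    if on_time == total:
        return "Effective"            # 100% on time
    pct = 100 * on_time / total
    if pct >= 80:
        return "Mostly Effective"     # 80% up to just under 100%
    if pct >= 50:
        return "Partially Effective"  # 50% up to just under 80%
    return "Not Effective"            # below 50%


# Hypothetical quarter: 46 of 50 scheduled tasks were completed on time (92%).
rating = rate_maintenance_effectiveness(46, 50)
```

The point is not the code itself but that the rating now comes from recorded maintenance data, which is the evidence management needs.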

The three lines of defence of control assurance

Obviously, we don’t need the same level of assurance for all controls – the resource impost would be significant, for questionable value. To that end, we need a methodology that provides the ability to separate those controls that require further assurance from those where Control Self Assessments are sufficient.

The first step in this process is to prioritise the controls. The following matrix can assist:

| Consequence Level (of the risk) | Criticality of the Control | Assurance Priority | |
| --- | --- | --- | --- |
| Severe | 3,4,5 | 1 | It is critical that these controls are effective, therefore, they are the number one priority for the organisation’s audit program. Where possible, external auditing should be utilised to provide further assurance |
| Major | 4,5 | 2 | It is important that these controls are effective, therefore, they are a significant priority for the organisation’s audit program. Where possible, external auditing should be utilised to provide further assurance for the criticality 5 controls |
| Moderate | 5 | 3 | It is relatively important that these controls are effective. There is no requirement for external auditing. |
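The matrix can also be expressed as a simple lookup, which may help if the control register lives in a spreadsheet or database. The sketch below is illustrative only: the names `PRIORITY_MATRIX` and `assurance_priority` are hypothetical, and a result of `None` is used here for any control that falls outside the matrix.

```python
from typing import Optional

# Consequence level of the risk -> (qualifying control criticalities, assurance priority)
PRIORITY_MATRIX = {
    "Severe": ({3, 4, 5}, 1),
    "Major": ({4, 5}, 2),
    "Moderate": ({5}, 3),
}


def assurance_priority(consequence: str, criticality: int) -> Optional[int]:
    """Return the assurance priority (1, 2 or 3) for a control,
    or None if the control falls outside the matrix."""
    entry = PRIORITY_MATRIX.get(consequence)
    if entry is None:
        return None
    criticalities, priority = entry
    return priority if criticality in criticalities else None
```

For example, `assurance_priority("Severe", 3)` gives priority 1, while `assurance_priority("Major", 3)` gives `None`, meaning the control is not prioritised for further assurance.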

Once prioritised, we can use the following three lines of defence model to assist in developing our audit program:

| 1st Line of Defence | 2nd Line of Defence | 3rd Line of Defence |
| --- | --- | --- |
| Control self-assessment. This involves the control owner making a judgement in relation to the effectiveness of the control. It involves documenting the organisation’s control processes with the aim of identifying suitable ways of measuring or testing each control. | Line management review or internal audit of controls against designated performance measures and key performance indicators. Primary focus is on controls against risks with the highest level of consequence. Outcomes of control audits are provided to the control owner. If there is any change to effectiveness, the risk owner needs to be informed, as this may change the level of the risk. | External audit conducted on specific controls. Once again, the focus needs to be on controls against risks with the highest level of consequence. |

| Assurance Priority | Line of Defence to be engaged | | |
| --- | --- | --- | --- |
| Priority 1 | × | × Internal Audit | × External Audit |
| Priority 2 | × | × Internal Audit | × External Audit |
| Priority 3 | × | × Line Management Review | × Internal Audit |
| All other controls | × | | |

At the completion of this process we have a prioritised list of controls that informs both our internal and external audit programs – a true risk-based auditing approach.

Conclusion

To truly manage risk, rather than ‘doing risk management’, it is essential that the control environment within the organisation is effective. We can ill afford, however, to simply estimate (or guess) whether our controls are effective.

So, what do we need to have in place in order to provide the level of assurance to management that the controls relating to their highest consequence risks are effective? For each control we need to identify:

- A control owner

- Performance measures and KPIs

- What effective looks like

Put simply, if you can’t prove your controls are effective, you are not managing your risks.