This is not a drill

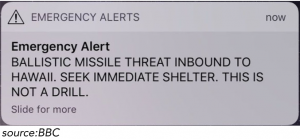

On Saturday 13th January 2018, the Hawaii Emergency Management Agency (HEMA) sent out a missile alert:

"BALLISTIC MISSILE THREAT INBOUND TO HAWAII. SEEK IMMEDIATE SHELTER. THIS IS NOT A DRILL."

What followed were scenes of absolute fear, confusion and chaos as Hawaiians sought shelter wherever they could: under bridges and in underground shelters. Video even emerged of a father lowering his child into a drain so she would be shielded from any blast.

Problem was, it wasn’t a real threat and it wasn’t even supposed to be a drill – just a test of the internal system.

A full, panic-filled 38 minutes later, another message was released:

"THERE IS NO MISSILE THREAT OR DANGER TO THE STATE OF HAWAII. REPEAT. FALSE ALARM."

It later emerged, during an extremely difficult press conference given by the Governor and the head of HEMA, that an employee had selected the wrong option from a drop-down menu. It also emerged that, when the system asked the employee to confirm that they wanted the alert to proceed, they had selected yes.

Shannon Liao from The Verge gives a terrific explanation of what happened. In essence, the alert system came down to a confusing list of choices for the operator. As Liao reported, the list used obscure labels for actions, many of which had similar names, and, as Liao rightly pointed out, "any employee either without proper training or caffeine could click on the wrong link and send off a warning to the public of a ballistic missile threat".

“On Saturday at 8am, the employee was simply supposed to test the internal missile alert system, without sending it out to the public. The test was run occasionally, but not by any routine schedule”.

"On Saturday, it took officials nearly 40 minutes to correct the false alarm. The FCC (Federal Communications Commission) announced on Sunday that it was investigating the false missile alert incident, citing a lack of 'reasonable safeguards or process controls in place,'" Liao reported.

So, time to put on the 20/20 hindsight lens. I will look at this as an unrealised risk (i.e. what it should have looked like in the organisation's risk register prior to it occurring). In doing so, I will attempt to highlight how the event may have been avoided.

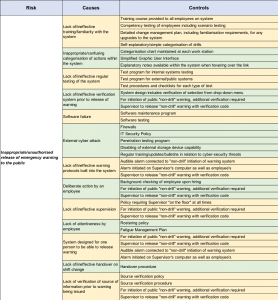

The risk in this case that I would have captured in the risk register is as follows:

Inappropriate/unauthorised release of emergency warning to the public

The first thing to do is to identify the potential causes:

| Risk | Causes |
|---|---|
| Inappropriate/unauthorised release of emergency warning to the public | Lack of/ineffective training/familiarity with the system |
| | Lack of/ineffective regular testing of the system |
| | Lack of/ineffective verification system prior to release of warning |
| | Software failure |
| | External cyber attack |
| | Lack of/ineffective warning protocols built into the system |
| | Deliberate action by an employee |
| | Lack of/ineffective supervision |
| | System designed for one person to be able to release warning |
| | Lack of attentiveness by employee |
| | Lack of/ineffective handover on shift change |
| | Lack of verification of source of information prior to warning being issued |

Although I am not completely familiar with the controls that would (or should) be in place, below is an overview of what some of those controls might have been (stressing, once again, that this is an outsider's, layman's view of the organisation):


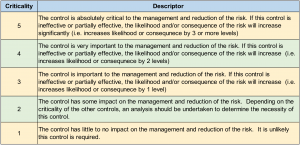

From information in the public domain, I identified effectiveness measures for the controls and, where appropriate, noted where it appears that controls were not in place. I have also included a criticality rating (once again, a judgement call on my part) using the following matrix:


So, what does our risk register look like with all of this information included?


Once the controls have been assessed for effectiveness, we can assess the likelihood that the risk will occur using the likelihood rating system I have developed, shown below. It uses control effectiveness rather than time and/or frequency to determine likelihood, which is a much more meaningful measure of how likely an incident is to occur:

| Rating | Descriptor |
|---|---|
| Almost Certain | Less than 10% of the critical controls associated with the risk are rated as either Effective or Mostly Effective. Without control improvement, it is almost certain that the risk will eventuate at some point. |
| Likely | 10-30% of the critical controls associated with the risk are rated as either Effective or Mostly Effective. Without control improvement, it is more likely than not that the risk will eventuate. |
| Possible | 30-70% of the critical controls associated with the risk are rated as either Effective or Mostly Effective. Without control improvement, the risk may eventuate. |
| Unlikely | 70-90% of the critical controls associated with the risk are rated as either Effective or Mostly Effective. The strength of this control environment means that, if the risk does eventuate, it will more than likely be caused by external factors not known to the Agency. |
| Rare | 90% or more of the critical controls associated with the risk are rated as either Effective or Mostly Effective. The strength of this control environment means that, if this risk eventuates, it is most likely as a result of external circumstances outside the control of the Agency. |
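To make the matrix concrete, here is a minimal Python sketch of how the percentage bands might be applied to a set of assessed controls. The `Control` class, the `likelihood` function and any rating labels other than "Effective" and "Mostly Effective" are hypothetical illustrations, not part of any real system. Because the bands share their edge values (30%, for example, appears in both Likely and Possible), the sketch assumes each band includes its lower bound and excludes its upper bound.

```python
from dataclasses import dataclass

# The two ratings the matrix counts as "strong" controls.
STRONG_RATINGS = {"Effective", "Mostly Effective"}


@dataclass
class Control:
    name: str
    rating: str      # only "Effective"/"Mostly Effective" come from the matrix; other labels are hypothetical
    critical: bool   # criticality judgement; only critical controls feed the likelihood rating


def likelihood(controls: list[Control]) -> str:
    """Rate likelihood from the share of critical controls rated Effective or Mostly Effective.

    Assumption: each band includes its lower bound and excludes its upper bound,
    so exactly 30% is rated Possible and exactly 90% is rated Rare.
    """
    critical = [c for c in controls if c.critical]
    if not critical:
        raise ValueError("no critical controls to assess")
    strong = sum(c.rating in STRONG_RATINGS for c in critical)
    pct = 100 * strong / len(critical)
    if pct < 10:
        return "Almost Certain"
    if pct < 30:
        return "Likely"
    if pct < 70:
        return "Possible"
    if pct < 90:
        return "Unlikely"
    return "Rare"


# Hypothetical ratings, invented purely for illustration.
example = [
    Control("Operator training and familiarity", "Mostly Effective", critical=True),
    Control("Two-person authorisation before release", "Not in place", critical=True),
    Control("Confirmation prompt before release", "Ineffective", critical=True),
    Control("Separate test and live environments", "Not in place", critical=True),
    Control("Supervision of operators", "Ineffective", critical=True),
    Control("Cyber security monitoring", "Effective", critical=False),
]
print(likelihood(example))  # → Likely (1 of 5 critical controls is strong, i.e. 20%)
```

The example ratings are not my assessment of the Agency's controls. Note that only critical controls are counted: the non-critical control rated Effective does not change the result.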

On that basis, having conducted what is, I admit, a rudimentary assessment of control effectiveness based only on information in the public domain, I would have assessed this risk prior to the incident occurring somewhere between Likely and Almost Certain.

If this risk was in the Agency's risk register, they probably did what most organisations do and assessed the likelihood based on how many times it had happened before, which, from my research, appears to be never. The likelihood was therefore probably assessed as Rare or Unlikely. Yet nobody has reported the incident along the lines of: "Well, that is the first time in 60-plus years it has happened, so that is a pretty good record." Instead, as with all such incidents, excellent past performance counts for nothing when it comes to the damage to reputation.

The public (and the media in this case) have been very quick to highlight the control shortfalls. There will be an investigation and one or two people may lose their jobs, but, like all incidents that occur in complex organisations, this was a failure of the organisational system: the poor person on duty just happened to be the one who exposed these shortfalls.

So, the big question with the benefit of hindsight: was this foreseeable and, more importantly, was it avoidable?

I will let you be the judge.